AI Gateway: Connect and securely manage all your LLMs

Try our AI Plugins

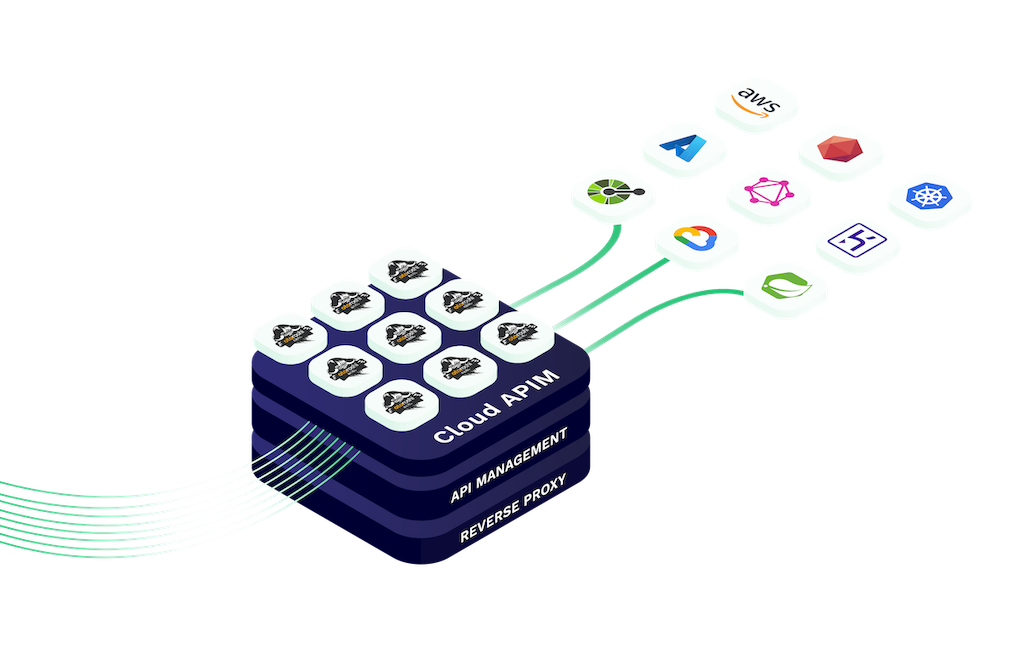

Integrating AI in your API Gateways allows seamless access to machine learning models and AI services.

Our AI Gateway securely manages requests, processes data, and delivers AI-powered insights efficiently.

Whether you're incorporating predictive analytics, natural language processing, or other AI functions, Cloud APIM’s AI Gateways make integration easy, scalable, and secure.

The AI Gateway from Cloud APIM empowers developers to easily connect with large language models (LLMs) through a unified, OpenAI-compatible API interface. Whether you're using OpenAI, open-source models, or hybrid deployments, our gateway ensures consistent, secure, and scalable access.
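As a rough illustration of what "OpenAI-compatible" means for a client, the sketch below builds a chat completion request in the OpenAI wire format and points it at a gateway. The base URL `https://gateway.example.com`, the API key, and the assumption that the gateway exposes the standard `/v1/chat/completions` path are all placeholders for illustration; check your own gateway route for the real values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumption: the gateway serves the standard OpenAI chat path.
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical gateway URL and key, for illustration only.
request = build_chat_request("https://gateway.example.com", "hypothetical-key", "gpt-4", "Hello")
# To send it for real: urllib.request.urlopen(request)
```

Because the payload shape stays the same, switching providers would, under these assumptions, only mean changing the `model` value or the route behind the gateway, not the client code.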

This flexible architecture enables fast deployment, cross-provider integration, and multi-environment support. Built for enterprises and startups alike, it removes vendor lock-in and allows you to route requests based on performance, cost, or geography.

With native support in Otoroshi and full observability features, the AI Gateway is the ultimate foundation for your AI-driven APIs.


Use our all-in-one interface: simplify interactions and minimize integration hassles.

More than 10 LLM providers are supported today, with more on the way: OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Hugging Face, and OVH AI Endpoints.

Use semantic caching to speed up repeated queries, improve response times, and reduce costs.

Balance load across multiple providers to keep performance consistent.

Manage LLM token quotas per consumer and optimize costs.

Every LLM request is audited with details about the consumer, the LLM provider, and usage. These audit events can be exported in several ways for further reporting.

Join leading developers and enterprises using Cloud APIM to integrate AI into their platforms.

Whether you're building intelligent workflows or scalable chat interfaces, our AI Gateway makes it secure, cost-effective, and lightning fast.

Start your journey toward smarter APIs and better user experiences today.

Cloud APIM’s AI Gateway, powered by the Otoroshi LLM Extension, gives you full visibility and control over the cost of every large language model request.

Easily monitor API usage, generate detailed cost reports per model, and fine-tune your usage to reduce waste and maximize efficiency across all LLM providers.

Cost tracking is enabled by default in the Otoroshi LLM Extension, making it simple to stay on budget while scaling your AI infrastructure securely and smartly.

An AI Gateway is similar to an API Gateway but designed specifically for handling AI or machine learning requests. It manages, routes, and secures AI-based interactions such as LLM calls, ensuring reliable and scalable integration of artificial intelligence in applications.

Cloud APIM AI Plugins are built-in and require no extra setup. You can use them in both our Serverless and Otoroshi Managed environments to quickly integrate AI features into your APIs.

Our AI Gateway supports OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Hugging Face, OVH AI Endpoints, and more. More than 10 LLM providers are currently supported, and new ones are added frequently.

Yes, semantic cache is available in both Otoroshi Managed and Serverless products. It improves response speed for repeated or similar queries, reduces latency, and cuts down on LLM processing costs.

Yes. Our AI Gateway, through the Otoroshi LLM Extension, provides detailed cost tracking for every LLM request. You can generate per-model reports and monitor usage to optimize your AI budget effectively.

Yes, our AI Gateway is fully OpenAI-compatible. You can connect to OpenAI’s API directly or use it alongside other LLM providers in a unified interface with routing, security, and observability.

Absolutely. With our multi-model routing, you can send requests to different LLMs based on rules like cost, performance, or context, making your AI architecture more flexible and optimized.

Semantic caching identifies and stores similar LLM queries to avoid repeated calls. This dramatically reduces the number of expensive model invocations, saving on token usage and improving response times.
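To make the idea concrete, here is a minimal sketch of a semantic cache. It is not how the Otoroshi LLM Extension implements it: a production cache would typically compare embedding vectors from a model, whereas this toy version uses bag-of-words vectors and cosine similarity so that it runs with the standard library alone. The `threshold` value and all names are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries = []           # list of (vector, response) pairs

    def get(self, prompt: str):
        """Return the cached response of the most similar prompt, or None."""
        vec = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


def answer(prompt: str, cache: SemanticCache, call_model):
    """Serve from the cache when a similar prompt was seen; otherwise call the model."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

In this sketch, `call_model` is any function that takes a prompt and returns a response; it is only invoked on a cache miss, which is where the token savings come from.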

Yes. With our AI Gateway, you can define token quotas, request limits, or model usage caps per route, API key, or user, helping you enforce budget limits and optimize LLM costs across teams.
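The logic behind a per-consumer token quota can be sketched as follows. This is an illustrative in-memory model, not the gateway's configuration or API: the class name, the single fixed limit, and the absence of a reset window (per day, per month) are simplifying assumptions.

```python
from collections import defaultdict


class TokenQuota:
    """Tracks token usage per consumer against one fixed limit (hypothetical model)."""

    def __init__(self, limit_per_consumer: int):
        self.limit = limit_per_consumer
        self.used = defaultdict(int)  # consumer id -> tokens consumed so far

    def try_consume(self, consumer: str, tokens: int) -> bool:
        """Record the usage and return True, or return False if it would exceed the quota."""
        if self.used[consumer] + tokens > self.limit:
            return False
        self.used[consumer] += tokens
        return True

    def remaining(self, consumer: str) -> int:
        return self.limit - self.used[consumer]


quota = TokenQuota(limit_per_consumer=1000)
quota.try_consume("team-a", 600)   # accepted
quota.try_consume("team-a", 500)   # rejected: would exceed the 1000-token limit
```

A rejected request leaves the counter untouched, so a smaller follow-up request from the same consumer can still succeed.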

AI Gateways let you route traffic between models based on cost, speed, or purpose. For example, you can use a cheaper open-source model for basic tasks and reserve premium LLMs like GPT-4 for high-value queries.
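A cost-aware routing rule of this kind might look like the sketch below. The model names, tiers, and prices are invented for illustration and do not reflect real provider pricing or the gateway's actual rule syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    tier: str                  # "basic" or "premium" (hypothetical labels)
    cost_per_1k_tokens: float  # made-up prices, for illustration only


# Hypothetical catalog of models reachable through the gateway.
MODELS = [
    Model("small-open-model", "basic", 0.10),
    Model("medium-open-model", "basic", 0.25),
    Model("premium-model-a", "premium", 3.00),
    Model("premium-model-b", "premium", 2.50),
]


def pick_model(task_tier: str, models=MODELS) -> Model:
    """Pick the cheapest model within the tier the task requires."""
    candidates = [m for m in models if m.tier == task_tier]
    if not candidates:
        raise ValueError(f"no model available for tier {task_tier!r}")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


basic_choice = pick_model("basic")      # cheapest basic model
premium_choice = pick_model("premium")  # cheapest premium model
```

The same shape extends to other criteria such as latency or region by changing the filter and the sort key.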

Yes. The Otoroshi LLM Extension includes detailed analytics. You can view cost per request, generate usage and spend reports by model, and export them for billing or optimization purposes.
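As a sketch of what a spend report by model involves, the snippet below aggregates usage records into a cost per model and renders the result as CSV. The record fields (`model`, `tokens`), the price table, and the CSV layout are assumptions made for this example; the actual audit event schema and export formats are defined by the Otoroshi LLM Extension.

```python
import csv
import io
from collections import defaultdict

# Hypothetical prices per 1,000 tokens, for illustration only.
PRICE_PER_1K_TOKENS = {"model-a": 0.5, "model-b": 2.0}


def spend_by_model(records, prices=PRICE_PER_1K_TOKENS) -> dict:
    """Sum the cost of each usage record, grouped by model name."""
    totals = defaultdict(float)
    for record in records:
        totals[record["model"]] += record["tokens"] / 1000 * prices[record["model"]]
    return dict(totals)


def to_csv(totals: dict) -> str:
    """Render the per-model totals as CSV text, sorted by model name."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["model", "spend"])
    for model in sorted(totals):
        writer.writerow([model, f"{totals[model]:.4f}"])
    return buffer.getvalue()


# Made-up usage records standing in for exported audit events.
usage = [
    {"model": "model-a", "tokens": 2000},
    {"model": "model-a", "tokens": 1000},
    {"model": "model-b", "tokens": 500},
]
report = to_csv(spend_by_model(usage))
```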

With our AI Gateway, you can connect to multiple LLM providers using a unified OpenAI-compatible API. It simplifies model switching, load balancing, and routing through a single secured entry point.
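One simple way to picture load balancing behind a single entry point is round-robin selection with failover, sketched below. This is a generic technique shown under assumptions, not a description of the gateway's internal strategy; the provider names and the callable-based interface are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Rotates across providers and skips to the next one when a call fails."""

    def __init__(self, providers):
        # providers: list of (name, callable) pairs; each callable takes a prompt.
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = list(providers)
        self._cycle = itertools.cycle(range(len(self.providers)))

    def call(self, prompt: str):
        """Try each provider at most once, starting from the next one in rotation."""
        errors = []
        for _ in range(len(self.providers)):
            name, fn = self.providers[next(self._cycle)]
            try:
                return name, fn(prompt)
            except Exception as exc:  # a failing provider is skipped
                errors.append((name, exc))
        raise RuntimeError(f"all providers failed: {errors}")
```

Callers see one `call` method regardless of how many providers sit behind it, which mirrors the single-entry-point idea described above.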