AI Gateway: Securely connect and manage all your LLMs

Try our AI Plugins

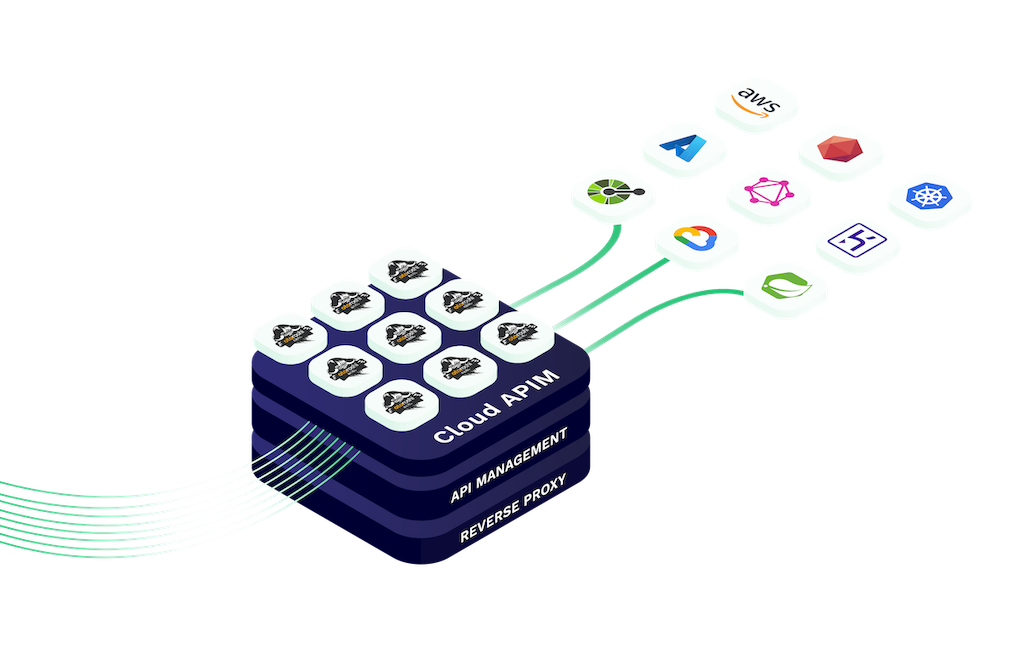

Integrating AI into your API gateways gives you seamless access to machine learning models and AI services.

Our AI Gateway securely handles requests, processes data, and delivers AI-driven insights efficiently.

Whether you need predictive analytics, NLP, or other AI functions, Cloud APIM's AI Gateways make integration easy, scalable, and secure.

Cloud APIM's AI Gateway lets developers connect easily to LLMs through a unified, OpenAI-compatible API. Whether you use OpenAI, open-source models, or hybrid deployments, our gateway ensures secure, consistent, and scalable access.

This flexible architecture enables rapid deployments, cross-platform integration, and multi-environment support. Ideal for enterprises and startups, it eliminates vendor lock-in and lets you route requests based on performance, cost, or geography.

With native support in Otoroshi and full observability features, the AI Gateway is the definitive foundation for your AI-powered APIs.

Use our all-in-one interface: simplify interactions and minimize integration hassles.

10+ LLM providers are supported today, with more on the way: OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Hugging Face, and OVH AI Endpoints.

Speed up repeated queries, enhance response times, and reduce costs.

Ensure optimal performance by distributing workloads across multiple providers.

Manage LLM token quotas per consumer and optimize costs.

Every LLM request is audited with details about the consumer, the LLM provider, and usage. These audit events can be exported through multiple methods for further reporting.

Join the leading developers and companies that use Cloud APIM to integrate AI into their platforms.

Whether you are building intelligent workflows or scalable chat interfaces, our AI Gateway makes them secure, cost-effective, and fast.

Start your journey toward smarter APIs and better user experiences today.

Cloud APIM's AI Gateway, powered by the Otoroshi LLM Extension, gives you full visibility and control over the cost of every LLM request.

Easily monitor API usage, generate detailed per-model reports, and tune usage to reduce waste and maximize efficiency.

Cost tracking is enabled by default in the Otoroshi LLM Extension, which simplifies budget management as you scale your AI infrastructure.

An AI Gateway is similar to an API Gateway but designed specifically for handling AI or machine learning requests. It manages, routes, and secures AI-based interactions such as LLM calls, ensuring reliable and scalable integration of artificial intelligence in applications.

Cloud APIM AI Plugins are built-in and require no extra setup. You can use them in both our Serverless and Otoroshi Managed environments to quickly integrate AI features into your APIs.

Our AI Gateway supports OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Hugging Face, OVH AI Endpoints, and more. More than 10 LLM providers are currently supported, and new ones are added frequently.

Yes, semantic cache is available in both Otoroshi Managed and Serverless products. It improves response speed for repeated or similar queries, reduces latency, and cuts down on LLM processing costs.

Yes. Our AI Gateway, through the Otoroshi LLM Extension, provides detailed cost tracking for every LLM request. You can generate per-model reports and monitor usage to optimize your AI budget effectively.

Yes, our AI Gateway is fully OpenAI-compatible. You can connect to OpenAI’s API directly or use it alongside other LLM providers in a unified interface with routing, security, and observability.
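
As a rough illustration of what "OpenAI-compatible" means for a client, the sketch below builds a chat completion request in the OpenAI request format and points it at a gateway instead of at OpenAI. The base URL, the API key, and the `/chat/completions` path on your gateway route are assumptions made for this example; check your own Cloud APIM route configuration for the real values.

```python
import json
import urllib.request

# Hypothetical values: replace with your own gateway route and API key.
GATEWAY_BASE_URL = "https://ai-gateway.example.com/v1"
API_KEY = "your-gateway-api-key"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Build the request only; calling send(request) would perform the HTTP call.
request = build_chat_request("gpt-4", "Summarize our API changelog.")
```

Because the request body follows the OpenAI format, switching providers in this sketch would only mean changing the `model` value, not the client code.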

Absolutely. With our multi-model routing, you can send requests to different LLMs based on rules like cost, performance, or context, making your AI architecture more flexible and optimized.

Semantic caching identifies and stores similar LLM queries to avoid repeated calls. This dramatically reduces the number of expensive model invocations, saving on token usage and improving response times.
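
To make the idea concrete, here is a minimal sketch of a semantic cache. It is not the gateway's implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the `SemanticCache` class, the 0.8 similarity threshold, and the `answer` helper are all hypothetical names chosen for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Stores (embedding, response) pairs and returns the closest match."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # assumed cutoff for "similar enough"
        self.entries = []

    def get(self, prompt: str):
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


def answer(cache: SemanticCache, prompt: str, llm) -> str:
    """Return a cached response when one is similar enough, else call the model."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = llm(prompt)
    cache.put(prompt, response)
    return response
```

Each cache hit skips one model invocation, which is where the savings in tokens and latency come from. The threshold controls the trade-off: a lower value saves more calls but risks returning an answer to a different question.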

Yes. With our AI Gateway, you can define token quotas, request limits, or model usage caps per route, API key, or user. This helps you enforce budget limits and optimize LLM costs across teams.
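
The logic behind a per-consumer token quota can be sketched in a few lines. This is only an illustration of the concept, not how the gateway is configured: `TokenQuota`, `QuotaEnforcer`, and the limits used are hypothetical, and a real deployment would also reset usage at the end of each quota window.

```python
from dataclasses import dataclass


@dataclass
class TokenQuota:
    limit: int      # maximum tokens allowed in the current window
    used: int = 0   # tokens consumed so far

    def remaining(self) -> int:
        return max(self.limit - self.used, 0)


class QuotaEnforcer:
    """Tracks token usage per consumer (for example per API key or user)."""

    def __init__(self, default_limit: int):
        self.default_limit = default_limit
        self.quotas = {}

    def set_limit(self, consumer: str, limit: int) -> None:
        """Give one consumer its own limit, keeping any usage already recorded."""
        quota = self.quotas.setdefault(consumer, TokenQuota(limit))
        quota.limit = limit

    def try_consume(self, consumer: str, tokens: int) -> bool:
        """Record the usage and return True, or return False if it would exceed the quota."""
        quota = self.quotas.setdefault(consumer, TokenQuota(self.default_limit))
        if tokens > quota.remaining():
            return False
        quota.used += tokens
        return True
```

A request that would push a consumer past its limit is rejected before it reaches the model, so the spend for that consumer stays capped.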

AI Gateways let you route traffic between models based on cost, speed, or purpose. For example, you can use a cheaper open-source model for basic tasks and reserve premium LLMs like GPT-4 for high-value queries.
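
A minimal sketch of this kind of routing rule follows. The model names other than GPT-4, the `tier` labels, and the per-token prices are made-up placeholders for illustration, not real pricing and not the gateway's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    name: str
    tier: str                  # "basic" or "premium" (labels assumed for this sketch)
    cost_per_1k_tokens: float  # placeholder prices, not real pricing


# Hypothetical routing table.
ROUTES = [
    ModelRoute("open-source-model", "basic", 0.10),
    ModelRoute("open-source-model-large", "basic", 0.40),
    ModelRoute("gpt-4", "premium", 30.00),
]


def choose_model(routes, high_value: bool) -> ModelRoute:
    """Pick the cheapest model in the tier that matches the request's value."""
    wanted_tier = "premium" if high_value else "basic"
    candidates = [route for route in routes if route.tier == wanted_tier]
    if not candidates:
        raise ValueError(f"no model configured for tier {wanted_tier!r}")
    return min(candidates, key=lambda route: route.cost_per_1k_tokens)
```

With this table, a basic task goes to the cheapest open-source entry, while a request flagged as high value is sent to the premium model.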

Yes. The Otoroshi LLM Extension includes detailed analytics. You can view cost per request, generate usage and spend reports by model, and export them for billing or optimization purposes.
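
If you export these events and want to build your own spend report, the aggregation could look like the sketch below. The event fields (`model`, `tokens`, `cost`) are an assumed shape for this example, not the actual export schema of the Otoroshi LLM Extension.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal


def spend_by_model(events):
    """Sum requests, tokens, and cost per model from exported usage events."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "cost": Decimal("0")})
    for event in events:
        row = totals[event["model"]]
        row["requests"] += 1
        row["tokens"] += event["tokens"]
        # Decimal avoids floating-point drift when adding many small amounts.
        row["cost"] += Decimal(event["cost"])
    return dict(totals)


def to_csv(report) -> str:
    """Render the per-model report as CSV text, one row per model."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["model", "requests", "tokens", "cost"])
    for model in sorted(report):
        row = report[model]
        writer.writerow([model, row["requests"], row["tokens"], row["cost"]])
    return buffer.getvalue()
```

The resulting CSV can then be loaded into a spreadsheet or billing tool for further analysis.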

With our AI Gateway, you can connect to multiple LLM providers using a unified OpenAI-compatible API. It simplifies model switching, load balancing, and routing through a single secured entry point.