AI Gateway: Securely connect and manage all your LLMs


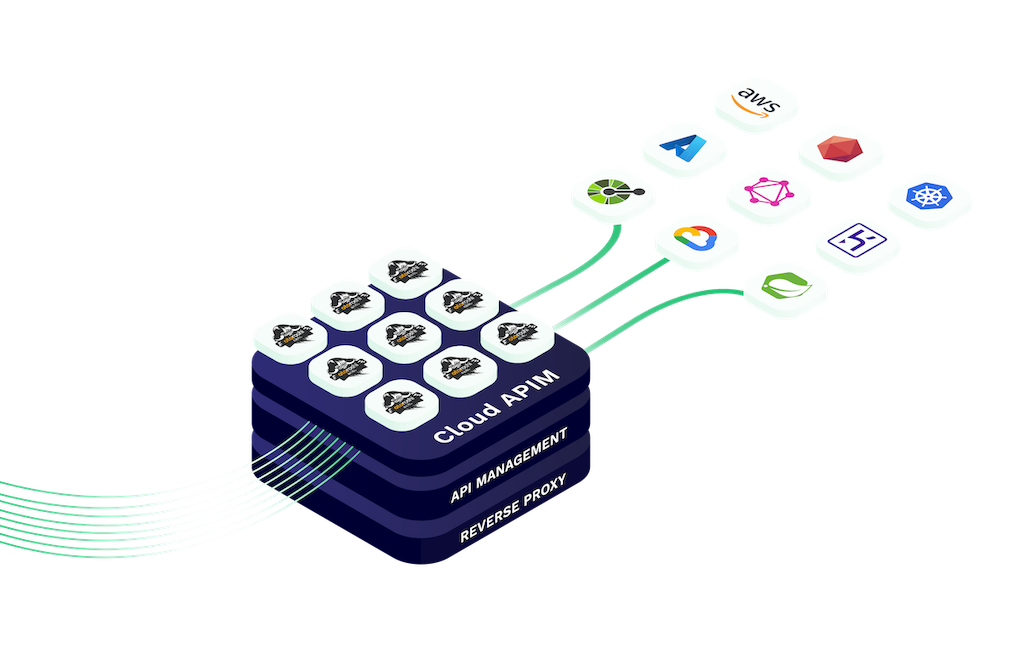

Integrating AI into your API gateways provides seamless access to machine learning models and AI services.

Our AI gateway handles requests, processes data and efficiently delivers AI-powered insights.

Whether you are adding predictive analytics, natural language processing or other AI capabilities, Cloud APIM AI gateways make the integration simple, scalable and secure.

The Cloud APIM AI gateway lets developers easily connect to large language models (LLMs) through a unified, OpenAI-compatible API.

Whether you use OpenAI, open-source models or hybrid deployments, our gateway ensures consistent, secure and scalable access.

This flexible architecture enables fast deployment, multi-provider integration and support for multiple environments. Designed for enterprises and startups alike, it removes vendor lock-in and lets you route requests based on performance, cost or geography.

With native Otoroshi support and comprehensive observability features, the AI gateway is the ideal foundation for your AI-driven APIs.

Use our all-in-one interface: simplify interactions and reduce integration friction

More than 10 LLM providers are already supported, with many more to come. Use OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Huggingface and OVH AI Endpoints

Speed up repeated requests, improve response times and reduce costs.

Ensure optimal performance by distributing workloads across multiple providers
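To illustrate what distributing workloads across providers can look like, here is a minimal weighted round-robin sketch. The provider names and integer weights are assumptions for the example; the page does not describe the gateway's actual load-balancing algorithm, so this is only one common technique, not the product's implementation.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str    # illustrative provider label
    weight: int  # relative share of traffic (assumed integer weights)


def weighted_round_robin(providers):
    """Return an endless iterator of provider names, proportional to their weights."""
    # Repeat each provider 'weight' times, then cycle over the expanded list.
    expanded = [p.name for p in providers for _ in range(p.weight)]
    return itertools.cycle(expanded)


picker = weighted_round_robin([Provider("openai", 2), Provider("mistral", 1)])
first_six = [next(picker) for _ in range(6)]
print(first_six)  # ['openai', 'openai', 'mistral', 'openai', 'openai', 'mistral']
```

This naive expansion sends short bursts to the heaviest provider; smoother variants interleave picks, and a production gateway would typically also factor in health checks and failover.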

Manage LLM token quotas per consumer and optimize costs

Every LLM request is audited with details about the consumer, the LLM provider and usage. All these audit events can be exported through various channels for advanced reporting
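As a rough picture of an audit record carrying the consumer, provider and usage, the sketch below serializes one event as a JSON line, a format many log exporters accept. The field names and the `LlmAuditEvent` class are hypothetical; they are not the Otoroshi audit event schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class LlmAuditEvent:
    # Hypothetical field names, chosen for illustration only.
    consumer: str
    provider: str
    model: str
    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


def to_json_line(event: LlmAuditEvent) -> str:
    """Serialize one audit event as a single JSON line for export."""
    record = asdict(event)  # asdict() skips properties, so add the total explicitly
    record["total_tokens"] = event.total_tokens
    return json.dumps(record, sort_keys=True)


line = to_json_line(LlmAuditEvent("team-a", "openai", "gpt-4", 120, 80))
print(line)
```

Appending one such line per request to a file or message queue is enough for downstream tools to aggregate usage by consumer or provider.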

Join the leading developers and companies using Cloud APIM to bring AI to their platforms.

Whether you are building intelligent workflows or scalable chat interfaces, our AI gateway gives you security, cost efficiency and speed.

Start building smarter APIs and a better user experience today.

The Cloud APIM AI gateway, powered by the Otoroshi LLM extension, gives you full visibility and control over the cost of every large language model request.

Easily monitor API usage, generate detailed cost reports per model and adjust your usage to reduce waste and get the most out of every LLM provider.

Cost tracking is enabled by default in the Otoroshi LLM extension, which makes it easier to stay on budget while scaling your AI infrastructure securely and intelligently.

An AI Gateway is similar to an API Gateway, but designed specifically to handle AI and machine learning requests. It manages, routes and secures AI interactions such as LLM calls, ensuring reliable and scalable integration of artificial intelligence into applications.

Cloud APIM AI plugins are built in and require no additional configuration. You can use them in our Serverless and Otoroshi Managed environments to quickly add AI capabilities to your APIs.

Our AI Gateway supports OpenAI, Azure OpenAI, Ollama, Mistral, Anthropic, Cohere, Gemini, Groq, Huggingface, OVH AI Endpoints and more. Over 10 LLM providers are currently supported, and new ones are added regularly.

Yes, semantic caching is available in both the Otoroshi Managed and Serverless products. It speeds up responses to repeated or similar requests, reducing latency and LLM processing costs.

Yes. Through the Otoroshi LLM extension, our AI Gateway provides detailed cost tracking for every LLM request. You can generate reports per model and monitor usage to manage your AI budget effectively.

Yes, our AI Gateway is fully OpenAI-compatible. You can connect directly to the OpenAI API or use it alongside other LLM providers through a unified interface with routing, security and observability.

Absolutely. With multi-model routing, you can send requests to different LLMs based on rules such as cost, performance or context, making your AI architecture more flexible and efficient.

Semantic caching stores LLM responses and reuses them when a new request is semantically similar to a previous one, avoiding repeated calls. This significantly reduces the number of expensive model invocations, saves tokens and improves response times.
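The core idea behind semantic caching can be sketched as a nearest-neighbour lookup over prompt embeddings: if a new prompt's embedding is close enough to a cached one, the stored response is returned instead of calling the model. This is a minimal sketch under stated assumptions: embeddings are passed in as precomputed vectors (in practice they would come from an embedding model), and the 0.9 cosine-similarity threshold is arbitrary. It does not reflect how the Otoroshi extension implements its cache.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is a zero vector."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Return a cached response when a new prompt embedding is similar enough."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # assumed similarity cut-off
        self.entries = []           # list of (embedding, response) pairs

    def get(self, embedding):
        # Linear scan for the most similar cached prompt.
        best_score, best_response = -1.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, embedding, response):
        self.entries.append((embedding, response))


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0, 0.2], "Paris is the capital of France.")
hit = cache.get([0.98, 0.05, 0.21])  # near-duplicate prompt: served from cache
miss = cache.get([0.0, 1.0, 0.0])    # unrelated prompt: goes to the LLM
```

A linear scan is fine for a sketch; at scale a vector index would replace it, and the threshold trades hit rate against the risk of returning an answer to a subtly different question.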

Yes. With our AI Gateway, you can set token quotas, request limits or model usage caps per route, API key or user, helping you stay within budget and optimize LLM costs across your teams.
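A per-consumer token quota boils down to keeping a running total per key and rejecting calls that would exceed the budget. The sketch below assumes a fixed budget with no time window and checks an estimated token count before the call; both are simplifying assumptions, and the `TokenQuota` class is hypothetical rather than part of the gateway.

```python
from collections import defaultdict


class TokenQuota:
    """Fixed token budget per consumer (an API key, a user or a route)."""

    def __init__(self, limit_per_consumer):
        self.limit = limit_per_consumer
        self.used = defaultdict(int)  # tokens consumed so far, per consumer

    def allow(self, consumer, requested_tokens):
        """Reserve tokens if the budget allows it; otherwise reject the call."""
        if self.used[consumer] + requested_tokens > self.limit:
            return False
        self.used[consumer] += requested_tokens
        return True


quota = TokenQuota(limit_per_consumer=1000)
print(quota.allow("api-key-1", 600))  # True
print(quota.allow("api-key-1", 500))  # False: would exceed 1000
print(quota.allow("api-key-2", 500))  # True: separate budget
```

A real quota would usually reset per period (daily, monthly) and reconcile the estimate with the actual token usage reported in the LLM response.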

AI gateways let you route traffic between models based on cost, speed or purpose. For example, you can use a cheaper open-source model for basic tasks and reserve premium LLMs such as GPT-4 for high-value requests.
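The cost-based routing example in the previous answer can be expressed as a simple rule: basic tasks go to a cheaper model, everything else (or anything flagged as high value) goes to the premium one. The model identifiers and the set of "basic" task labels are assumptions made up for this sketch; an actual gateway would express such rules in its own route configuration.

```python
CHEAP_MODEL = "open-source-small"  # hypothetical model identifier
PREMIUM_MODEL = "gpt-4"
BASIC_TASKS = {"classification", "summary", "extraction"}  # illustrative labels


def choose_model(task: str, high_value: bool = False) -> str:
    """Pick a model: cheap for basic tasks, premium for high-value or unknown tasks."""
    if high_value or task not in BASIC_TASKS:
        return PREMIUM_MODEL
    return CHEAP_MODEL


print(choose_model("summary"))                   # open-source-small
print(choose_model("summary", high_value=True))  # gpt-4
print(choose_model("code-review"))               # gpt-4
```

Defaulting unknown tasks to the premium model favours answer quality over savings; reversing the default is equally valid if cost is the priority.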

Yes. The Otoroshi LLM extension includes detailed analytics. You can view the cost per request, generate usage and spending reports per model, and export them for billing or optimization.
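Per-request cost is usually computed from token counts and per-token prices, with input and output tokens priced separately, and a per-model report is just the sum over requests. The prices below are made-up placeholders, not real provider tariffs, and the model names are hypothetical.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens: (input, output). Not real tariffs.
PRICES = {
    "model-a": (0.5, 1.5),
    "model-b": (3.0, 15.0),
}


def request_cost(model, prompt_tokens, completion_tokens):
    """Cost of one request: input and output tokens priced separately."""
    price_in, price_out = PRICES[model]
    return (prompt_tokens * price_in + completion_tokens * price_out) / 1000


def cost_report(requests):
    """Sum the cost of (model, prompt_tokens, completion_tokens) tuples per model."""
    totals = defaultdict(float)
    for model, prompt_tokens, completion_tokens in requests:
        totals[model] += request_cost(model, prompt_tokens, completion_tokens)
    return dict(totals)


report = cost_report([
    ("model-a", 1000, 1000),  # 0.5 + 1.5 = 2.0
    ("model-a", 2000, 0),     # 1.0
    ("model-b", 1000, 200),   # 3.0 + 3.0 = 6.0
])
print(report)  # {'model-a': 3.0, 'model-b': 6.0}
```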

With our AI gateway, you can connect to multiple LLM providers through a single OpenAI-compatible API. This simplifies model switching, load balancing and routing through one secure entry point.
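Because the gateway exposes an OpenAI-compatible API, a client can send the standard chat-completion payload (`model` plus a list of `messages`) to the gateway instead of to a provider. The sketch below only builds the request with Python's standard library; the gateway URL, the `/v1/chat/completions` path and the bearer-token authentication are assumptions to adapt to your own route, and it assumes the gateway picks the provider from the route or model name.

```python
import json
import urllib.request

# Hypothetical gateway endpoint; replace with the route exposed by your gateway.
GATEWAY_URL = "https://ai-gateway.example.com/v1/chat/completions"


def build_chat_request(model, prompt, api_key):
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


# Switching model only changes one argument; the client code stays the same.
req = build_chat_request("gpt-4", "Hello!", "my-api-key")
# To actually send it: urllib.request.urlopen(req)
```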